Artificial intelligence is no longer a futuristic concept or a tool exclusive to the most advanced companies. For enterprises globally, AI is becoming a central part of business transformation, enabling smarter decision making, greater efficiency and enhanced competitive advantage. But how are businesses adopting AI, and what challenges do they face in scaling it effectively?

To answer these questions, we conducted the Dell Technologies Enterprise AI Adoption Survey, polling 3,800 IT decision makers (ITDMs) and AI practitioners across five countries. Our findings give insight into the current state of global AI usage and the accelerators helping enterprises fulfill their AI business objectives and realize value. Keep reading to learn about five key insights that emerged from our research.

1. Data is the key to getting your AI house in order

AI is only as good as the data it runs on, and enterprises are struggling to manage and leverage their data effectively. Businesses cited data quality, availability, management and security as the top technical barriers to AI implementation.

Without clean, well-organized and accessible data, even advanced AI models will underperform. These challenges suggest organizations should focus on developing strategies that prioritize seamless data integration, enhanced security measures and scalable solutions for large datasets.

2. Flexibility is a must for AI workload placements

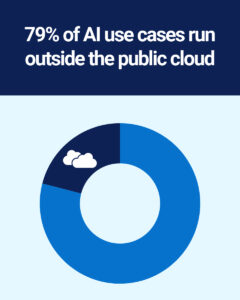

Our research shows a multicloud approach is an attractive option for enterprises looking to run their AI workloads:

- 79% of AI use cases in production are running outside the public cloud

- 98% of organizations have calculated total cost of ownership (TCO) for AI use cases in the public cloud

AI workloads with the biggest impact are often tied to data that still sits within your own four walls. Public cloud offers convenience for experimentation, but it comes with a hefty price tag: a 2024 ESG analysis found that on-prem inferencing can be up to 75% more cost-effective than the public cloud. For scalable, long-term AI investments, on-prem solutions often deliver better ROI, which is what most organizations discover after calculating their TCO. But it's not all dollars and cents: on-prem deployments also offer stronger security and governance, which is especially valuable in sectors with strict compliance requirements, such as finance and healthcare. This highlights the need to carefully evaluate TCO for each use case and to place AI workloads where they strike the right balance of performance, security and scalability.

3. Increase data center efficiency by tapping into available power

Despite the growing discussion of power and cooling as bottlenecks to AI adoption, our survey indicates that enterprises may be overlooking trapped power and the solutions available today:

- 39% of data center power is not utilized

- 67% of GPU servers will use direct-to-chip liquid cooling in the next three years

While data center operators worry about the energy required to scale AI, they are leaving a lot of power on the table. Enterprises should make full use of their existing data center power capacity before investing in costly retrofits or new construction. Upgrading existing infrastructure from 14G to 16G or 17G rack servers can free up additional trapped power.

Looking ahead, growing adoption of innovations like direct-to-chip liquid cooling will be a key factor in addressing GPU power and performance constraints, while lowering data center cooling costs and increasing scalability for enterprise AI deployments.

4. A bias toward open source, and a need for support in deploying on-prem AI workloads

Organizations are moving toward greater transparency and flexibility in their AI ecosystems, and toward providers that offer a one-stop shop:

- 63% of AI use cases are expected to use open-source models in the next 12 months

- 77% of organizations want their infrastructure vendors to provide capabilities across all aspects of the AI adoption journey

- 83% of organizations want their AI PC vendors to provide capabilities across all aspects of the AI adoption journey

This shift toward open-source models and frameworks highlights the demand for customizable, transparent and cost-effective solutions. Open ecosystems deliver broad capabilities beyond what any single vendor can provide. At the same time, to meet their specific needs, IT decision makers are seeking vendors with end-to-end AI solutions that can help them fully integrate AI across their entire IT estate.

5. Small models running on-device offer another level of flexibility for AI use cases

AI PCs are an attractive option for democratizing access to AI, especially by leveraging small language models (SLMs):

- 35% of organizations plan to test SLMs on AI PCs in the next 12 months

Unlike their larger counterparts, SLMs are more cost- and energy-efficient, requiring less computational power while remaining sufficient for many applications, such as coding assistants.

What could this mean for businesses? SLMs enable real-time, on-device AI processing, which not only reduces latency but also improves productivity and minimizes environmental impact. Enterprise adoption of AI PCs could further enhance the accessibility of AI solutions for teams of all sizes, empowering employees with powerful tools for collaboration and automation.

Empower Your AI Journey with Confidence

What does this mean for enterprises today? Based on our findings, the key accelerators of AI adoption are:

- Evaluating TCO to optimize the mix of cloud, on-prem and edge deployments for each use case

- Improving data quality, access and management

- Better utilizing existing data center power capacity for AI workloads

- Embracing open-source models and frameworks for collaboration and innovation

- Running SLMs on AI PCs to enhance efficiency and accessibility

AI has become essential, not optional, for driving competitive advantage and operational excellence in today’s business landscape. While it has the potential to drive widespread efficiencies and breakthrough innovations, an organization’s success will be determined by the choices it makes now.

Want to take the next step? At Dell Technologies, we’re here to help you build an AI strategy that works for your business. Contact us to learn more about our AI solutions and how they can empower your team to achieve more.